Summation

Summation is the operation of adding a sequence of numbers; the result is their sum or total. If numbers are added sequentially from left to right, any intermediate result is a partial sum, prefix sum, or running total of the summation. The numbers to be summed may be integers, rational numbers, real numbers, or complex numbers. Besides numbers, other types of values can be added as well: vectors, matrices, polynomials and, in general, elements of any additive group (or even monoid). For finite sequences of such elements, summation always produces a well-defined sum (possibly by virtue of the convention for empty sums).

Summation of an infinite sequence of values is not always possible, and when a value can be given for an infinite summation, this involves more than just the addition operation, namely also the notion of a limit. Such infinite summations are known as series. Another notion involving limits of finite sums is integration. The term summation has a special meaning related to extrapolation in the context of divergent series.

The summation of the sequence [1, 2, 4, 2] is an expression whose value is the sum of each of the members of the sequence. In the example, 1 + 2 + 4 + 2 = 9. Since addition is associative, the value does not depend on how the additions are grouped; for instance (1 + 2) + (4 + 2) and 1 + ((2 + 4) + 2) both have the value 9. Therefore parentheses are usually omitted in repeated additions. Addition is also commutative, so permuting the terms of a finite sequence does not change its sum (for infinite summations this property may fail; see absolute convergence for conditions under which it still holds).
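The example can be checked in Python with built-ins and the standard `itertools.accumulate`. `accumulate` yields the running totals (prefix sums) described in the opening paragraph. This is a small illustrative sketch, and the variable names are arbitrary.

```python
from itertools import accumulate

terms = [1, 2, 4, 2]

# Partial (prefix) sums: 1, 1+2, 1+2+4, 1+2+4+2.
running_totals = list(accumulate(terms))
total = sum(terms)

# Associativity: different groupings of the additions give the same value.
grouped_a = (1 + 2) + (4 + 2)
grouped_b = 1 + ((2 + 4) + 2)

# Commutativity: a permutation of a finite sequence has the same sum.
permuted_total = sum([2, 4, 1, 2])

print(running_totals)                                 # [1, 3, 7, 9]
print(total, grouped_a, grouped_b, permuted_total)    # 9 9 9 9
```

The last running total is always the full sum.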

There is no special notation for the summation of such explicit sequences, because the corresponding repeated addition expression will do. There is only a slight difficulty if the sequence has fewer than two elements. The summation of a sequence of one term involves no plus sign, so it is indistinguishable from the term itself. The summation of the empty sequence cannot even be written down, although one can write its value "0" in its place. If, however, the terms of the sequence are given by a regular pattern, possibly of variable length, then a summation operator may be useful or even essential. For the summation of the consecutive integers from 1 to 100, one could use an addition expression with an ellipsis to indicate the missing terms: 1 + 2 + 3 + ... + 99 + 100. In this case the reader easily guesses the pattern. For more complicated patterns, however, one needs to be precise about the rule used to find successive terms, which can be achieved with the summation operator "Σ". Using this notation, the above summation is written as:

$$\sum_{i=1}^{100} i.$$

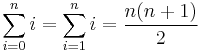

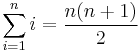

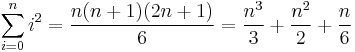

The value of this summation is 5050. It can be found without performing 99 additions, since it can be shown (for instance by mathematical induction) that

$$\sum_{i=1}^{n} i = \frac{n(n+1)}{2}$$

for all natural numbers n. More generally, formulas exist for many summations of terms following a regular pattern.
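A quick numerical check compares the closed form with direct addition. This is a sketch only: agreement on finitely many n is evidence, not a proof, and induction supplies the proof. The function name `sum_first_n` is a hypothetical helper.

```python
def sum_first_n(n: int) -> int:
    """Closed form n(n+1)/2 for 1 + 2 + ... + n (n(n+1) is always even)."""
    return n * (n + 1) // 2

# Direct summation over i = 1..100 versus the closed form.
direct = sum(range(1, 101))
closed = sum_first_n(100)
print(direct, closed)   # 5050 5050

# Spot-check the formula for n = 0..199 (n = 0 gives the empty sum, 0).
formula_holds = all(sum(range(1, n + 1)) == sum_first_n(n) for n in range(200))
```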

The term "indefinite summation" refers to the search for an inverse image of a given infinite sequence s of values under the forward difference operator. In other words, it is the search for a sequence, called an antidifference of s, whose finite differences are given by s. By contrast, summation as discussed in this article is called "definite summation".


Notation

Capital-sigma notation

Mathematical notation uses a symbol that compactly represents the summation of many similar terms: the summation symbol ∑ (U+2211), an enlarged form of the upright capital Greek letter Sigma. It is defined as

$$\sum_{i=m}^{n} a_i = a_m + a_{m+1} + a_{m+2} + \cdots + a_{n-1} + a_n.$$

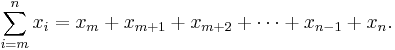

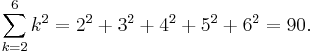

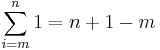

The subscript gives the symbol for an index variable, i. Here, i represents the index of summation; m is the lower bound of summation, and n is the upper bound of summation. The expression i = m under the summation symbol means that the index i starts out equal to m. Successive values of i are found by adding 1 to the previous value of i, stopping when i = n. An example:

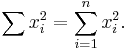
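The definition can be read as a loop: i starts at m and increases by 1 until it reaches n. Below is a minimal Python sketch of that reading. The helper name `sigma` is hypothetical, and the example 3² + 4² + 5² + 6² is chosen only for illustration.

```python
from typing import Callable

def sigma(a: Callable[[int], int], m: int, n: int) -> int:
    """Sum a(i) for i = m, m+1, ..., n with both bounds inclusive.

    `sigma` is a hypothetical helper used only for illustration.
    """
    total = 0
    for i in range(m, n + 1):   # i starts at m and increases by 1 until it equals n
        total += a(i)
    return total

print(sigma(lambda i: i * i, 3, 6))   # 3^2 + 4^2 + 5^2 + 6^2 = 86
```

Python's `range(m, n + 1)` excludes its stop value, which is why the upper bound is shifted by one.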

Informal writing sometimes omits the definition of the index and bounds of summation when these are clear from context, as in

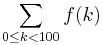

One often sees generalizations of this notation in which an arbitrary logical condition is supplied, and the sum is taken over all values satisfying the condition. For example:

$$\sum_{0 \le k < 100} f(k)$$

is the sum of f(k) over all (integer) k in the specified range,

$$\sum_{x \in S} f(x)$$

is the sum of f(x) over all elements x in the set S, and

$$\sum_{d \mid n} \mu(d)$$

is the sum of μ(d) over all positive integers d dividing n.[1]

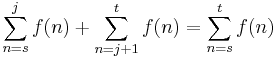

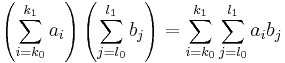
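Sums over a condition translate naturally into Python generator expressions with a filter. This is a sketch under some assumptions:

- `divisors` and `mobius` are hypothetical helpers using naive trial division, so they are only suitable for small n.
- The example summands (k, and x² over an example set S) are illustrative choices.
- The divisor-sum check relies on the well-known fact that the sum of μ(d) over the divisors d of n is 1 for n = 1 and 0 for n > 1.

```python
from typing import List

def divisors(n: int) -> List[int]:
    """Positive divisors of n by naive trial division (hypothetical helper)."""
    return [d for d in range(1, n + 1) if n % d == 0]

def mobius(n: int) -> int:
    """Möbius function: 0 if p^2 divides n for some prime p, else (-1)^(number of prime factors)."""
    result = 1
    p = 2
    while p * p <= n:
        if n % p == 0:
            n //= p
            if n % p == 0:   # p^2 divided the original n, so mu = 0
                return 0
            result = -result
        p += 1
    if n > 1:                # one prime factor larger than sqrt(n) remains
        result = -result
    return result

# Sum of f(k) = k over the integers k with 0 <= k < 100.
range_sum = sum(k for k in range(0, 100))

# Sum of f(x) = x^2 over the elements x of a finite set S.
S = {2, 3, 5}
set_sum = sum(x * x for x in S)

# Sum of mu(d) over the positive divisors d of n, for n = 1..10.
divisor_sums = [sum(mobius(d) for d in divisors(n)) for n in range(1, 11)]

print(range_sum, set_sum)   # 4950 38
print(divisor_sums)         # [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
```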

Several sigma signs can also be combined into one. For example,

$$\sum_{i,j}$$

is the same as

$$\sum_{i}\sum_{j}.$$
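The same equivalence can be sketched in Python for hypothetical terms a[i][j] stored in a nested list. Summing once over all index pairs (i, j) gives the same result as the iterated sum, first over i and then over j. The data are illustrative only.

```python
from itertools import product

# Hypothetical two-index terms a[i][j], for i in 0..1 and j in 0..2.
a = [[1, 2, 3],
     [4, 5, 6]]

# One sigma over all index pairs (i, j) ...
over_pairs = sum(a[i][j] for i, j in product(range(2), range(3)))

# ... equals the iterated sum: outer sum over i, inner sum over j.
iterated = sum(sum(a[i][j] for j in range(3)) for i in range(2))

print(over_pairs, iterated)   # 21 21
```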

A similar notation is used for the product of a sequence. A product is formed like a summation but uses multiplication instead of addition, and it gives 1 for an empty sequence instead of 0. The same basic structure is used, with ∏, an enlarged form of the Greek capital letter Pi, replacing the ∑.

Special cases

It is possible to sum fewer than 2 numbers:

- If the summation has one summand x, then the evaluated sum is x.

- If the summation has no summands, then the evaluated sum is zero, because zero is the identity for addition. This is known as the empty sum.

These degenerate cases are usually only used when the summation notation gives a degenerate result in a special case. For example, if m = n in the definition above, then there is only one term in the sum; if m > n, then there is none.
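Python's built-in `sum` and the standard `math.prod` (Python 3.8+) follow the same conventions for the degenerate cases. The empty sum is 0, the empty product is 1, and a single summand is returned unchanged. A short illustration:

```python
import math

empty_sum = sum([])             # 0, the identity for addition
single_sum = sum([7])           # one summand x sums to x
empty_product = math.prod([])   # 1, the identity for multiplication
product = math.prod([2, 3, 4])  # 2 * 3 * 4

# With inclusive bounds m..n, m > n gives an empty range and hence the empty sum.
m, n = 5, 4
empty_range_sum = sum(range(m, n + 1))

print(empty_sum, single_sum, empty_product, product, empty_range_sum)   # 0 7 1 24 0
```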

Formal definition

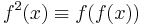

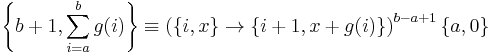

If iterated function notation is defined, e.g. by $f^0(x) = x$ and $f^{n+1}(x) = f^n(f(x))$, and is considered a more primitive notation, then summation can be defined in terms of iterated functions as

$$\sum_{i=a}^{b} g(i) = \text{second component of } \bigl(\{i, s\} \to \{i+1,\ s + g(i)\}\bigr)^{b-a+1}\{a, 0\},$$

where the curly braces define a 2-tuple and the right arrow is a function definition taking a 2-tuple to a 2-tuple. The function is applied b − a + 1 times to the tuple {a, 0}, and the second component of the result is the sum.
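The iterated-function definition can be sketched directly in Python, with tuples standing in for the 2-tuples. The helper names `iterate` and `sum_by_iteration` are hypothetical. For b < a the iteration count is non-positive, so no step is performed and the result is the empty sum, 0.

```python
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")

def iterate(f: Callable[[T], T], times: int, x: T) -> T:
    """Compute f^times(x), where f^0(x) = x and f^(n+1)(x) = f^n(f(x))."""
    for _ in range(times):   # a non-positive count performs no steps
        x = f(x)
    return x

def sum_by_iteration(g: Callable[[int], int], a: int, b: int) -> int:
    """Sum g(i) for i = a..b via the iterated 2-tuple map (hypothetical helper)."""
    def step(pair: Tuple[int, int]) -> Tuple[int, int]:
        i, s = pair
        return (i + 1, s + g(i))   # {i, s} -> {i + 1, s + g(i)}

    _, s = iterate(step, b - a + 1, (a, 0))   # start from {a, 0}
    return s                                  # the second component holds the sum

print(sum_by_iteration(lambda i: i * i, 3, 6))   # 86
print(sum_by_iteration(lambda i: i, 1, 100))     # 5050
print(sum_by_iteration(lambda i: i, 5, 4))       # 0 (empty sum)
```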

Measure theory notation

In the notation of measure and integration theory, a sum can be expressed as a definite integral,

$$\sum_{k=a}^{b} f(k) = \int_{[a,b]} f\,d\mu$$

where [a,b] is the subset of the integers from a to b, and where μ is the counting measure.

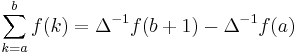

Fundamental theorem of discrete calculus

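Indefinite sums can be used to calculate definite sums with the formula:[2]

$$\sum_{i=a}^{b} f(i) = \Delta^{-1} f(b+1) - \Delta^{-1} f(a),$$

where $\Delta^{-1} f$ denotes an indefinite sum (antidifference) of f.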

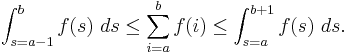

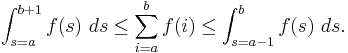
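As a worked instance, take f(i) = i. Then F(i) = i(i − 1)/2 is an antidifference, because F(i + 1) − F(i) = i. The sketch below checks that property on a range of integers and then uses F to evaluate a definite sum. The names `f`, `F` and `forward_difference` are hypothetical.

```python
def f(i: int) -> int:
    return i

def F(i: int) -> int:
    """An antidifference of f: F(i + 1) - F(i) == i (i*(i-1) is always even)."""
    return i * (i - 1) // 2

def forward_difference(G, i: int) -> int:
    """Delta G(i) = G(i + 1) - G(i)."""
    return G(i + 1) - G(i)

# Check that F really is an antidifference of f on a range of integers.
is_antidifference = all(forward_difference(F, i) == f(i) for i in range(-20, 20))

# Definite sum 3 + 4 + ... + 10 computed directly and via F(b + 1) - F(a).
a, b = 3, 10
direct = sum(f(i) for i in range(a, b + 1))
via_antidifference = F(b + 1) - F(a)
print(is_antidifference, direct, via_antidifference)   # True 52 52
```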

Approximation by definite integrals

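Many approximations of sums can be obtained from the following connection between sums and integrals. For any increasing function f,

$$\int_{s=a-1}^{b} f(s)\,ds \le \sum_{i=a}^{b} f(i) \le \int_{s=a}^{b+1} f(s)\,ds,$$

and for any decreasing function f,

$$\int_{s=a}^{b+1} f(s)\,ds \le \sum_{i=a}^{b} f(i) \le \int_{s=a-1}^{b} f(s)\,ds.$$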

For more general approximations, see the Euler–Maclaurin formula.
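The two bounds can be checked numerically on examples chosen here for illustration:

- a decreasing case, f(s) = 1/s with a = 2, whose integrals are logarithms;
- an increasing case, f(s) = s² with a = 1 and b = 10, whose integrals are differences of cubes divided by 3.

This is a sanity check, not a proof.

```python
import math

# Decreasing case, f(s) = 1/s, a = 2:
#   integral_2^(b+1) ds/s <= sum_{i=2}^{b} 1/i <= integral_1^b ds/s
decreasing_ok = []
for b in (10, 100, 1000):
    lower = math.log((b + 1) / 2)
    upper = math.log(b)
    total = sum(1.0 / i for i in range(2, b + 1))
    decreasing_ok.append(lower <= total <= upper)

# Increasing case, f(s) = s^2, a = 1, b = 10:
#   integral_0^10 s^2 ds <= sum_{i=1}^{10} i^2 <= integral_1^11 s^2 ds
total_sq = sum(i * i for i in range(1, 11))
lower_sq = (10 ** 3 - 0 ** 3) / 3
upper_sq = (11 ** 3 - 1 ** 3) / 3

print(decreasing_ok)                  # [True, True, True]
print(lower_sq, total_sq, upper_sq)
```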

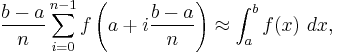

For summations in which the summand is given (or can be interpolated) by an integrable function of the index, the summation can be interpreted as a Riemann sum occurring in the definition of the corresponding definite integral. One can therefore expect, for instance, that

$$\frac{b-a}{n}\sum_{i=0}^{n-1} f\left(a + i\,\frac{b-a}{n}\right) \approx \int_a^b f(x)\,dx,$$

since the right-hand side is by definition the limit of the left-hand side as $n \to \infty$. However, for a given summation n is fixed, and little can be said about the error in this approximation without additional assumptions about f. For wildly oscillating functions, the Riemann sum can be arbitrarily far from the Riemann integral.
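The following sketch computes the left-hand side above (a left Riemann sum) for a smooth test function, sin on [0, π], whose integral is exactly 2. For this particular f, the error shrinks as n grows. As noted above, no such behaviour is guaranteed for arbitrary f. The function name `left_riemann_sum` is hypothetical.

```python
import math

def left_riemann_sum(f, a: float, b: float, n: int) -> float:
    """(b - a)/n * sum_{i=0}^{n-1} f(a + i (b - a)/n)."""
    h = (b - a) / n
    return h * sum(f(a + i * h) for i in range(n))

exact = 2.0   # integral of sin(x) over [0, pi]
errors = [abs(left_riemann_sum(math.sin, 0.0, math.pi, n) - exact)
          for n in (10, 100, 1000)]
print(errors)
```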

Identities

The formulas below involve finite sums; for infinite summations, see list of mathematical series.

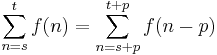

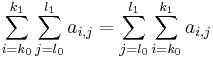

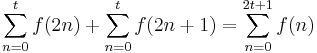

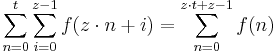

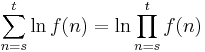

General manipulations

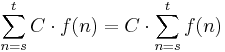

$$\sum_{n=s}^{t} C \cdot f(n) = C \cdot \sum_{n=s}^{t} f(n),$$

where C is a constant.

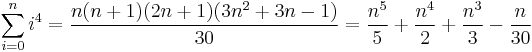

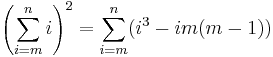

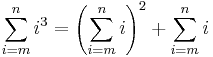

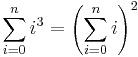
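Basic manipulation rules can be checked numerically on concrete summands. The rules checked are the constant-factor rule, the rules that a sum or difference of sums over the same range is the sum of the termwise sums or differences, and $c^{\sum f(n)} = \prod c^{f(n)}$. The summands f(n) = n², g(n) = 3n + 1 and the values of s, t, C, c are hypothetical choices. Exact integer arithmetic avoids rounding issues.

```python
import math

def f(n: int) -> int:   # hypothetical example summand
    return n * n

def g(n: int) -> int:   # hypothetical example summand
    return 3 * n + 1

s, t, C, c = 2, 9, 5, 2
rng = range(s, t + 1)   # n = s, s+1, ..., t

# Each pair is (left-hand side, right-hand side) of one identity.
constant_factor = (sum(C * f(n) for n in rng), C * sum(f(n) for n in rng))
sum_rule = (sum(f(n) for n in rng) + sum(g(n) for n in rng),
            sum(f(n) + g(n) for n in rng))
difference_rule = (sum(f(n) for n in rng) - sum(g(n) for n in rng),
                   sum(f(n) - g(n) for n in rng))
exponent_rule = (c ** sum(f(n) for n in rng), math.prod(c ** f(n) for n in rng))

for name, (lhs, rhs) in [("constant factor", constant_factor), ("sum", sum_rule),
                         ("difference", difference_rule), ("exponent", exponent_rule)]:
    print(name, lhs == rhs)
```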

Some summations of polynomial expressions

- $\sum_{i=1}^{n} \frac{1}{i} = H_n$ (see Harmonic number)

- $\sum_{i=m}^{n} i = \frac{(n+1-m)(n+m)}{2}$ (see arithmetic series)

- $\sum_{i=0}^{n} i = \frac{n(n+1)}{2}$ (special case of the arithmetic series)

where

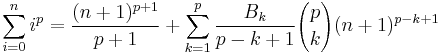

where  denotes a Bernoulli number

denotes a Bernoulli number

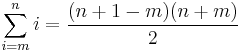

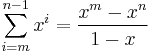

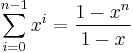

The following formulas are manipulations of $\sum_{i=1}^{n} i^3 = \left(\sum_{i=1}^{n} i\right)^2$, generalized to begin a series at any natural number value (i.e., $m \in \mathbb{N}$):

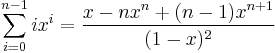

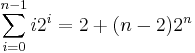

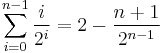
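A few of the formulas in this section can be spot-checked with exact arithmetic:

- the harmonic number $H_n$, computed with `fractions.Fraction`;
- the arithmetic-series closed form;
- the identity that the sum of the first n cubes equals the square of the sum of the first n integers.

The helper names are hypothetical. The checks cover finitely many cases, so they are illustrations, not proofs.

```python
from fractions import Fraction

def harmonic(n: int) -> Fraction:
    """H_n = 1 + 1/2 + ... + 1/n, computed exactly."""
    return sum((Fraction(1, i) for i in range(1, n + 1)), Fraction(0))

def arithmetic_sum(m: int, n: int) -> int:
    """Closed form for m + (m+1) + ... + n; (n+1-m)(n+m) is always even."""
    return (n + 1 - m) * (n + m) // 2

print(harmonic(4))   # 25/12

arithmetic_ok = all(arithmetic_sum(m, n) == sum(range(m, n + 1))
                    for m in range(0, 20) for n in range(m, 40))
cubes_ok = all(sum(i ** 3 for i in range(0, n + 1)) == sum(range(1, n + 1)) ** 2
               for n in range(50))
print(arithmetic_ok, cubes_ok)   # True True
```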

Some summations involving exponential terms

In the summations below, x is a constant not equal to 1.

- $\sum_{i=m}^{n-1} x^i = \frac{x^m - x^n}{1-x}$ (m < n; see geometric series)

- $\sum_{i=0}^{n-1} x^i = \frac{1 - x^n}{1-x}$ (geometric series starting at 1)

- $\sum_{i=0}^{n-1} 2^i = 2^n - 1$ (special case when x = 2)

- $\sum_{i=0}^{n-1} \frac{1}{2^i} = 2 - \frac{1}{2^{n-1}}$ (special case when x = 1/2)

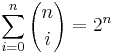

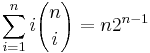

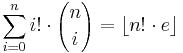

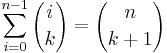

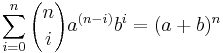
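The geometric-series formulas can be checked with `fractions.Fraction`, so that every comparison is exact. The value x = 3/7 and the bounds are arbitrary illustrative choices, and `geometric_sum` is a hypothetical helper.

```python
from fractions import Fraction

def geometric_sum(x: Fraction, m: int, n: int) -> Fraction:
    """Closed form for x^m + ... + x^(n-1); assumes x != 1 and m < n."""
    return (x ** m - x ** n) / (1 - x)

x = Fraction(3, 7)   # any rational x != 1; exact arithmetic avoids rounding

general_ok = sum(x ** i for i in range(2, 9)) == geometric_sum(x, 2, 9)
from_one_ok = sum(x ** i for i in range(0, 9)) == (1 - x ** 9) / (1 - x)
powers_of_two_ok = sum(2 ** i for i in range(0, 10)) == 2 ** 10 - 1
halves_ok = sum(Fraction(1, 2 ** i) for i in range(0, 10)) == 2 - Fraction(1, 2 ** 9)

print(general_ok, from_one_ok, powers_of_two_ok, halves_ok)   # True True True True
```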

Some summations involving binomial coefficients

There are a great many summation identities involving binomial coefficients (a whole chapter of Concrete Mathematics is devoted to just the basic techniques). Some of the most basic ones are the following.

- $\sum_{i=0}^{n} \binom{n}{i} a^{n-i} b^i = (a+b)^n$, the binomial theorem

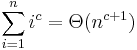
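The binomial theorem can be checked for integer values with the standard `math.comb` (Python 3.8+). The special case a = b = 1 gives the row sums of Pascal's triangle, 2ⁿ. The helper `binomial_expansion` and the test values are illustrative.

```python
from math import comb

def binomial_expansion(a: int, b: int, n: int) -> int:
    """Left-hand side of the binomial theorem: sum_i C(n, i) a^(n-i) b^i."""
    return sum(comb(n, i) * a ** (n - i) * b ** i for i in range(n + 1))

matches = binomial_expansion(3, 5, 7) == (3 + 5) ** 7

# With a = b = 1, the theorem gives the row sums of Pascal's triangle, 2^n.
row_sums = [binomial_expansion(1, 1, n) for n in range(6)]
print(matches, row_sums)   # True [1, 2, 4, 8, 16, 32]
```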

Growth rates

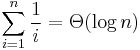

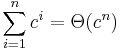

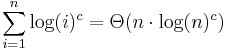

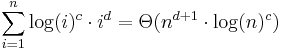

The following are useful approximations (using theta notation):

for real c greater than −1

for real c greater than −1 (See Harmonic number)

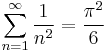

(See Harmonic number) for real c greater than 1

for real c greater than 1 for non-negative real c

for non-negative real c for non-negative real c, d

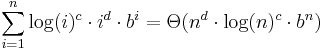

for non-negative real c, d for non-negative real b > 1, c, d

for non-negative real b > 1, c, d
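Theta notation is an asymptotic statement, so no finite computation can prove it. Ratios at a moderately large n can still illustrate the first two estimates:

- For c > −1, the sum of iᶜ divided by n^(c+1) approaches 1/(c + 1).
- $H_n - \ln n$ approaches Euler's constant (about 0.5772), a standard fact consistent with $H_n \in \Theta(\log n)$.

The choice n = 10 000 and the values of c are arbitrary, and `power_sum` is a hypothetical helper.

```python
import math

def power_sum(c: float, n: int) -> float:
    """Sum of i^c for i = 1..n (floating point)."""
    return sum(i ** c for i in range(1, n + 1))

n = 10_000

# For c > -1 the ratio to n^(c+1) tends to 1/(c+1).
ratios = {c: power_sum(c, n) / n ** (c + 1) for c in (0.5, 2.0)}

# H_n - ln(n) tends to Euler's constant, so H_n grows like log n.
harmonic_gap = power_sum(-1.0, n) - math.log(n)

print(ratios)         # roughly {0.5: 0.667, 2.0: 0.333}
print(harmonic_gap)   # roughly 0.5773
```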

See also

- Einstein notation

- Checksum

- Product (mathematics)

- Kahan summation algorithm

- Iterated binary operation

- Summation equation

- Basel problem

Notes

- [1] Although the name of the dummy variable does not matter (by definition), one usually uses letters from the middle of the alphabet (i through q) to denote integers, if there is a risk of confusion. For example, even if there should be no doubt about the interpretation, it could look slightly confusing to many mathematicians to see x instead of k in the above formulae involving k. See also typographical conventions in mathematical formulae.

- [2] Kenneth H. Rosen, John G. Michaels, "Handbook of Discrete and Combinatorial Mathematics", CRC Press, 1999, ISBN 0-8493-0149-1.

Further reading

- Nicholas J. Higham, "The accuracy of floating point summation", SIAM J. Scientific Computing 14 (4), 783–799 (1993).

External links

- Media related to Summation at Wikimedia Commons (//commons.wikimedia.org/wiki/Category:Summation)

- Summation on PlanetMath

- Derivation of Polynomials to Express the Sum of Natural Numbers with Exponents

$$\sum_{n=s}^{t} f(n) + \sum_{n=s}^{t} g(n) = \sum_{n=s}^{t} \left[f(n) + g(n)\right]$$

$$\sum_{n=s}^{t} f(n) - \sum_{n=s}^{t} g(n) = \sum_{n=s}^{t} \left[f(n) - g(n)\right]$$

$$c^{\left[\sum_{n=s}^{t} f(n)\right]} = \prod_{n=s}^{t} c^{f(n)}$$

$$\sum_{i=0}^{n} i^3 = \left(\frac{n(n+1)}{2}\right)^2 = \frac{n^4}{4} + \frac{n^3}{2} + \frac{n^2}{4} = \left[\sum_{i=1}^{n} i\right]^2$$